Reward-Driven Automated Curriculum Learning for Interaction-Aware Self-Driving at Unsignalized Intersections

Published in International Conference on Intelligent Robots and Systems (IROS) · IROS 2024. 14 October- 18 October 2024 | Abu Dhabi, UAE, 2024

Abstract

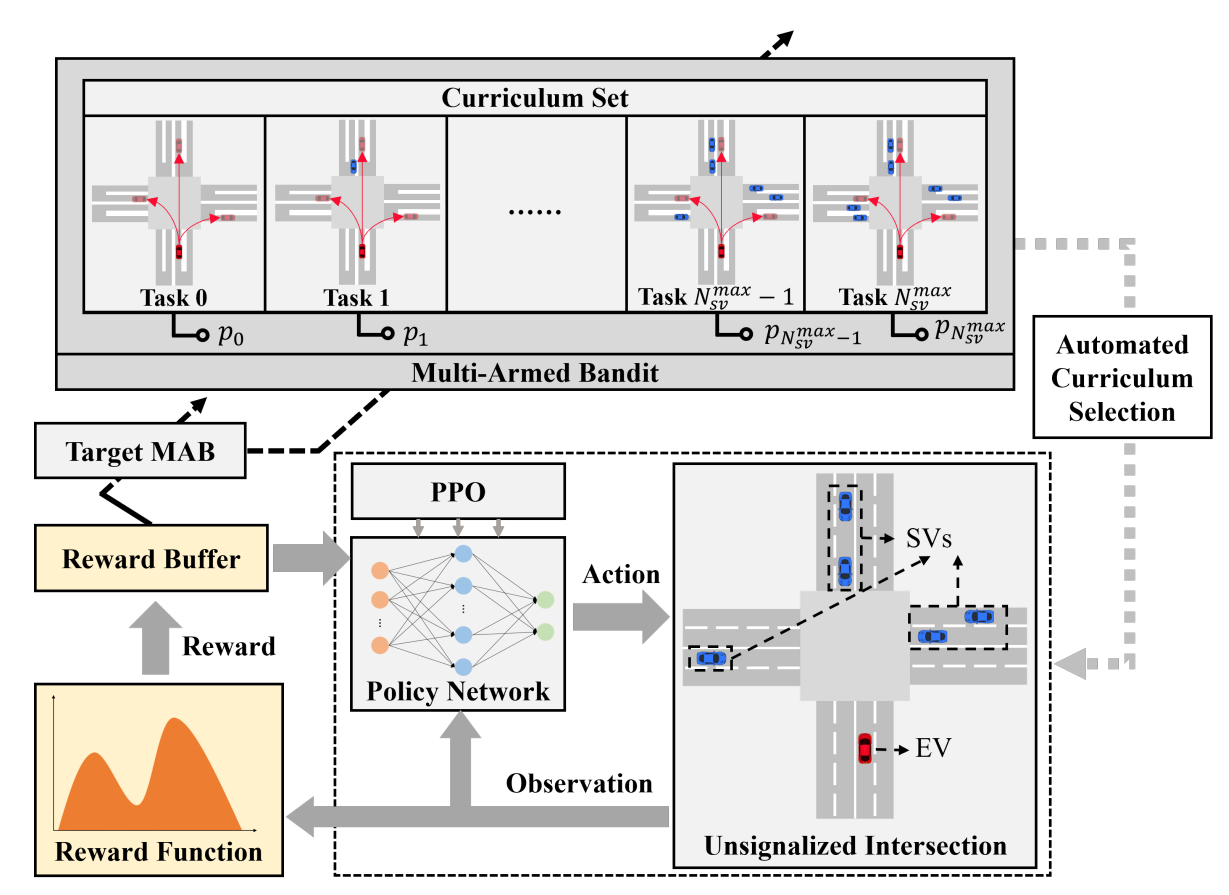

In this work, we present a reward-driven automated curriculum reinforcement learning approach for interaction-aware self-driving at unsignalized intersections, taking into account the uncertainties associated with surrounding vehicles (SVs). These uncertainties encompass the uncertainty of SVs’ driving intention and also the quantity of SVs. To deal with this problem, the curriculum set is specifically designed to accommodate a progressively increasing number of SVs. By implementing an automated curriculum selection mechanism, the importance weights are rationally allocated across various curricula, thereby facilitating improved sample efficiency and training outcomes. Furthermore, the reward function is meticulously designed to guide the agent towards effective policy exploration. Thus the proposed framework could proactively address the above uncertainties at unsignalized intersections by employing the automated curriculum learning technique that progressively increases task difficulty, and this ensures safe self-driving through effective interaction with SVs. Comparative experiments are conducted in Highway-Env, and the results indicate that our approach achieves the highest task success rate, attains strong robustness to initialization parameters of the curriculum selection module, and exhibits superior adaptability to diverse situational configurations at unsignalized intersections. Furthermore, the effectiveness of the proposed method is validated using the high-fidelity CARLA simulator.

Overview

Citation

@article{RN17,

title=,

author={Peng, Zengqi and Zhou, Xiao and Zheng, Lei and Wang, Yubin and Ma, Jun},

journal={arXiv preprint arXiv:2403.13674},

year={2024}

}